As a website building company, we wouldn't get very far if we didn't also host websites. When our customers click "Publish" in the editor, we distribute their website files across our own global server network. While many website builders use third-party CDN providers for this task, we've built our own system. If you work in tech, you might already be rolling your eyes.

I've never read about another company doing this, so I thought I'd share our experience with anyone who might find themselves in a similar situation or is simply curious about this type of thing.

This is the story of how and why we built our own specialized, globally distributed, high-speed, SSL-certificate-issuing, auto-scaling, auto-failover hosting network with a 100% cache hit rate on all sites.

Our website rendering engine runs on React. When a website is published on our platform, we render its React components to static HTML and CSS, so all published Umso sites are (mostly) static sites. When we first started, we simply hosted all those files on a single AWS EC2 instance in the Oregon region. There was no load balancing, no CDN, nothing beyond a server returning some HTML, CSS and media files.

Why even run a server if we just have static files? Why not use one of the many static site hosting services? There's one thing that makes us different from most people hosting static sites: we have thousands of them. And with those thousands of websites come thousands of domains and SSL certificates.

Manually setting up domains or managing certificates was never an option, and other hosting solutions weren't built for that many sites. So our very first website server ran a custom Golang webserver with a plugin that automatically issued Let's Encrypt certificates.

As our company matured we wanted to provide our customers with faster websites and higher reliability than a single server in a single location could offer. However, we didn't want to deal with the effort of building and maintaining our own hosting network so we started looking for existing solutions.

Because we wanted to host static files with quick worldwide access, CDNs seemed to fit the bill. That was until we started trying them out. There are a lot of cool edge-computing, global-network cloud companies now, but the landscape was a little less exciting 6 years ago.

We looked into almost every option on the market and even tried some out in production for a while. However, we'd always run into limitations sooner or later, because we wanted a lot of things: pricing that works for thousands of sites, quick invalidations, a robust API, high cache hit rates, willingness to support our use case and flexible configuration options.

I could write an entire blog post about our CDN adventures, but now is not the time. However, I do want to bring up one point which I believe is an issue across the entire software industry: approachability.

We're a small company with no outside funding or fancy connections in Silicon Valley, which means that to a lot of large companies we're simply not a worthwhile customer. However, in some industries (CDNs included) the large companies have the better product, or simply the better track record.

If you're looking for a profitable startup idea, maybe have a look at where a successful company has grown so much that it's no longer catering to the smaller business. But then maybe don't, who am I to say?

Either way, we built our own CDN. Sort of.

We like to keep things as simple as possible so you might even find our setup a little underwhelming. Do we run our own hardware? omg no. Serverless? no. Edge computing? kind of but not really. Docker? never tried it. I don't mean to be dismissive. My point is: you can get very far with a few simple tools.

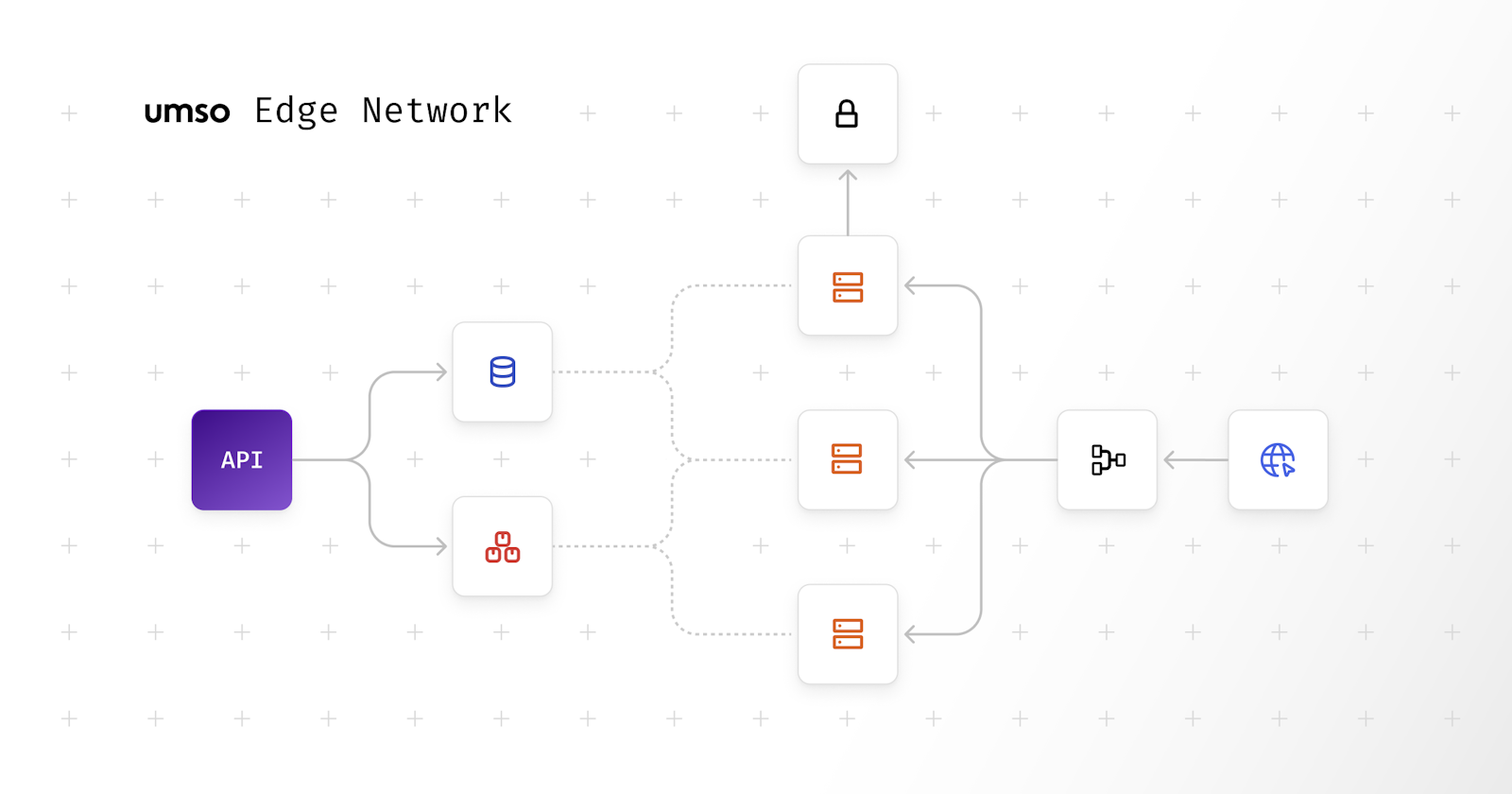

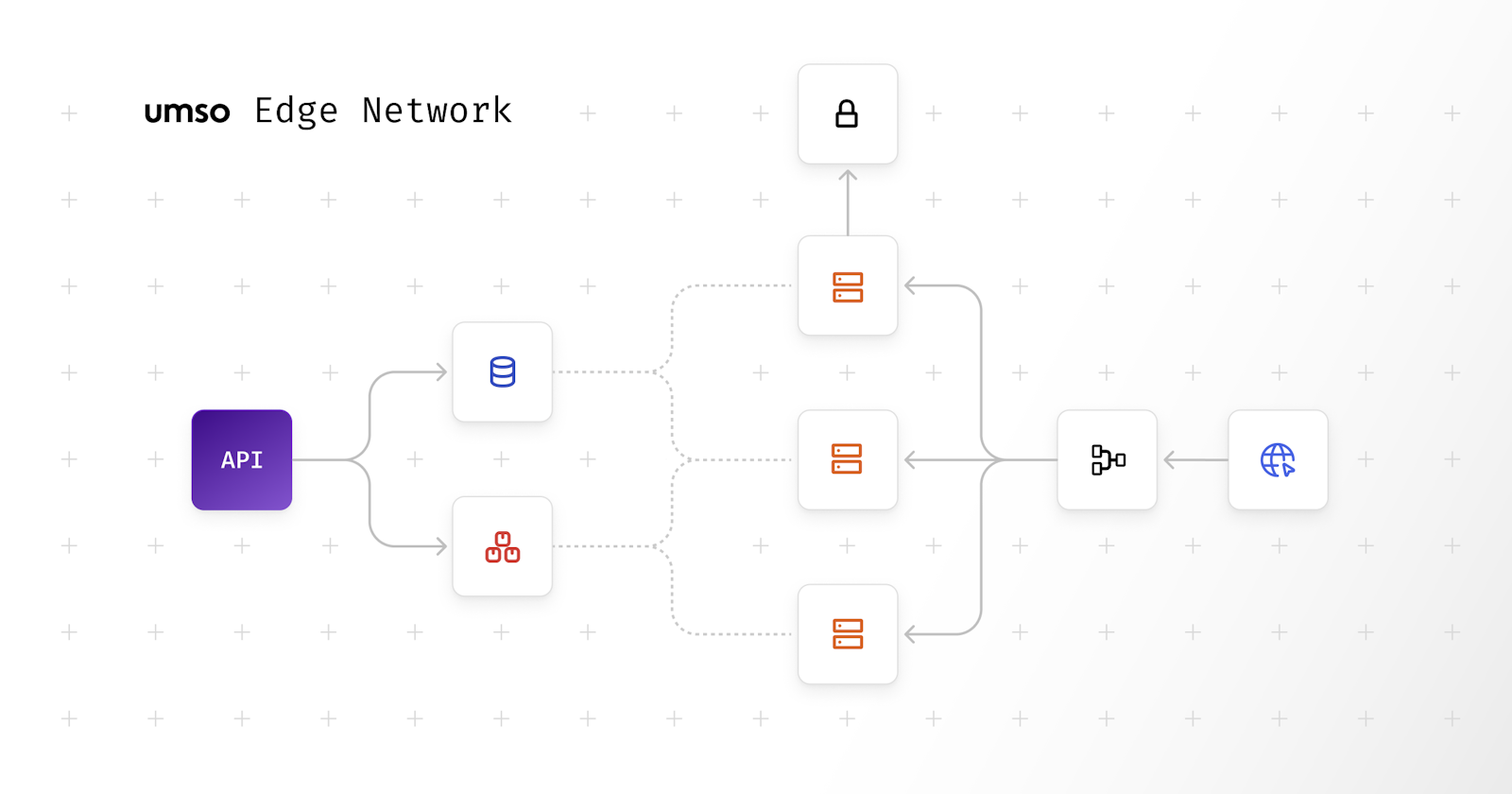

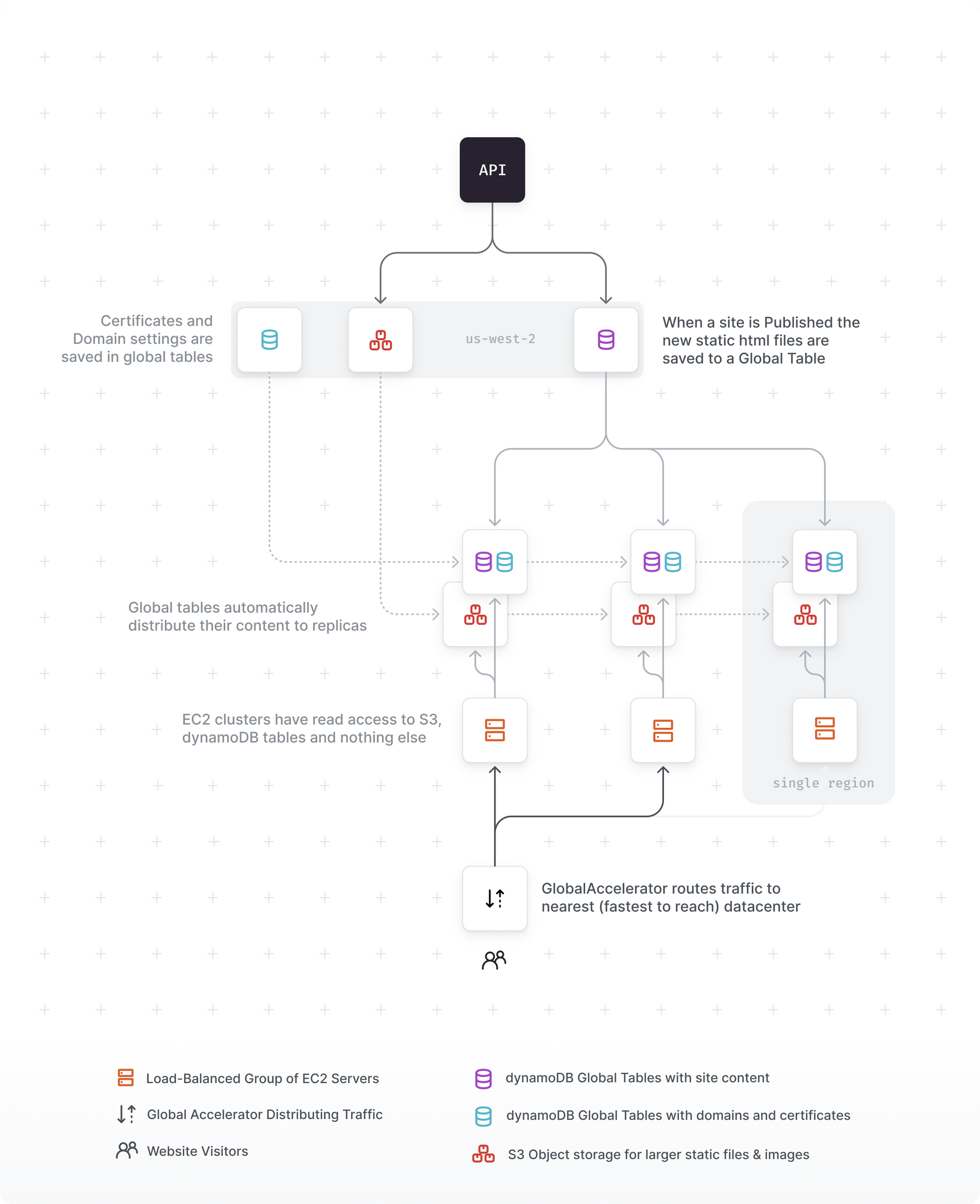

Our entire system runs on AWS. We use Global Accelerator to provide our customers with an Anycast IP address which routes visitors to their nearest datacenter. We have four identical server setups in Canada, the USA, Europe and India.

They all consist of a Network Load Balancer distributing traffic to an autoscaling fleet of EC2 instances running our own custom Golang server. Our Go binaries (about 15 MB) are built with GitHub Actions and deployed via CodeDeploy. On average it takes less than two minutes from merging a PR to all of our instances running the latest code. We never SSH into our instances.

Each location also has a handful of DynamoDB tables and S3 buckets. Together with some security groups and IAM rules, those are all the resources we have in our hosting locations. Of course our website building product depends on a lot more infrastructure. The setup I'm talking about here only concerns the site hosting aspect.

There is no central server in our content delivery network. Each of the four server locations has all the data it needs to function independently. When a site is published, we distribute a copy of it to each datacenter. If our API and central database go offline, our websites will still be available. If three of our network regions go offline, all of our websites will still be available.

Because we're a small team and don't like complexity, we didn't want to deal with database management in four different regions and we also didn't want to handle the data distribution ourselves. And we found the perfect tool to do it all for us: DynamoDB Global Tables.

While there are services that offer many databases with global replication, we liked DynamoDB for two reasons: it's serverless and it's cheap. It wouldn't usually be regarded as a cheap service, but it is for our use case: we can cache files on the EC2 servers to keep our request volume down, and the table size is almost negligible.

As far as local caching goes, we run a distributed caching service as part of our main Golang program. All the servers in the same region share an in-memory cache, which means that the total cache size grows linearly with the number of servers, and therefore with server load, in each region.
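How the shared cache decides which server holds which file isn't covered here. A common approach (groupcache uses consistent hashing for this) is to give every key exactly one owner among a region's servers, so a file is cached once per region instead of once per server. Below is a minimal sketch using rendezvous hashing, a simpler alternative to a hash ring, assuming the peer list comes from the autoscaling group. `OwnerOf` is a hypothetical helper, not our actual implementation.

```go
package main

import (
	"hash/fnv"
)

// OwnerOf picks which peer is responsible for caching key, using
// rendezvous (highest-random-weight) hashing: each server scores every
// (peer, key) pair and the highest score wins. Every server computes the
// same owner independently, so no coordination is needed.
// Returns "" when there are no peers.
func OwnerOf(key string, peers []string) string {
	var owner string
	var best uint64
	for i, p := range peers {
		h := fnv.New64a()
		h.Write([]byte(p))
		h.Write([]byte{0}) // separator so ("ab", "c") hashes differently from ("a", "bc")
		h.Write([]byte(key))
		if score := h.Sum64(); i == 0 || score > best {
			owner, best = p, score
		}
	}
	return owner
}
```

This is also why capacity grows linearly: with N servers each contributing M bytes of memory, a region can hold roughly N × M bytes of distinct files. When autoscaling adds or removes an instance, only the keys that the new or departing instance wins move; everything else stays where it was.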

Our initial goal was to improve the speed and reliability of our customers' sites. Of course there were hiccups along the way while we figured things out, but for a few years now our system has been running really solidly.

We monitor our uptime and server speed with a third-party tool called Hyperping, which makes requests to our servers and measures their responses and speed. Our uptime of 99.998% works out to about 52 seconds of downtime over a 30-day period. That's a really high availability and better than both Squarespace and Wix. We also make our uptime and response speed public on our status page.

While our speed and uptime numbers could also be achieved with a third-party CDN or hosting solution, our own system has a lot of additional benefits.

100% cache hit rate: Because the edge servers receive all necessary site files whenever a site is updated, they never need to request anything from an origin server. If a file isn't in memory, it's loaded from the local database, which takes less than 10 ms on average.

Big CDNs are good at keeping cache hit rates high when a few files are accessed by many people, but they don't do very well with thousands of small files that are accessed less frequently. For our customers who are just starting out, every pageview counts, so it's great that all of them are fast as well.

Instant site publishes: When there's new content, we don't have to invalidate the existing content, we just overwrite it. That means new content is available as soon as it has been globally replicated which takes less than two seconds on average.

Flexibility, Transferability: We have full control over the code of our server which means we can add new functionality quite easily, such as implementing site & page passwords or server-side analytics. While it certainly is easiest to run all of our infrastructure within AWS, we could also host our edge servers with another provider if we wanted to.

We own it: We don't own the infrastructure, but that's been mostly commoditized. We do own the system. That means we won't have to deal with sales teams, worry about deprecation, our provider pivoting, or anything that comes with buying a service from a third-party provider.

EU-endpoint: Because we already have a server setup in the EU, we added another endpoint which European customers can use to direct all of their traffic only to our EU servers. This negates the performance benefit that comes with a global network but it gives some customers peace of mind when it comes to EU privacy laws.

Cost: You might think that running all this infrastructure ourselves would be very expensive, especially with AWS egress pricing. However, most of this is offset by the fact that we don't pay anything extra for additional sites. We essentially just pay for egress traffic and server load. With our code being highly optimized and our servers scaling down during slow periods, we end up paying not a ton more than we would with a third-party provider.

It took a lot of time to figure out how to build all of this and how to make it work together. While we love what we have now, we'd definitely look for a third-party provider before building anything if we hadn't already. All we'd need would be: unlimited sites, flexible pricing, high cache hit rates, instant invalidations, flexible configuration, a solid and well documented API, root domain support, and a long history of reliability, without having to drag ourselves through a long sales process.

There's also the issue of having to maintain it all. However, because we have kept the number of moving parts as low as possible, there's not a lot to do. It would be great to just pay someone else to deal with it entirely, but if we used a CDN, we would still need to keep our origin server available at all times. And even if there were a service that handled all of it for us, we'd still have other infrastructure to maintain. We're an always-online company either way.

We did the not-very-startup thing and built something ourselves which is already being provided by a giant industry. It turned out to be a lot less complicated than one might think. Somehow this worked out well for us and I hope this article might inspire you to go down an unusual path and learn something new as well.

If you find yourself in a similar situation and want to run your own global hosting network, you might get very far with just Global Accelerator, DynamoDB Global Tables and Caddy. If any of this has been helpful or if you have any questions, feel free to reach out to me on Twitter. Thanks for reading!

